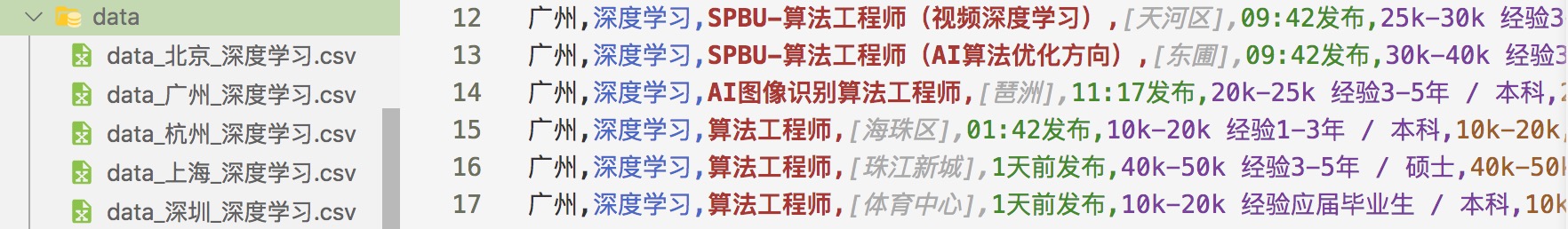

1. Data Preparation

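I ran into a problem while scraping. Calling Lagou's positionAjax.json endpoint used to work very well, but now every request I try comes back with a "requests too frequent" message, even though the status code is 200. In other words, the endpoint still receives the request; it just withholds the data because some condition is not met. Visiting the site in a browser works normally, so this is not literally a "too many requests" problem. My guess is that a client IP restriction is in place, where any request that does not come from the deployment's IP is treated as an external crawler, so I chose Selenium to get around it.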
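Since the status code is 200 either way, the block can only be detected from the response body. Below is a minimal sketch of such a check. The function name `is_soft_blocked` is hypothetical, and the assumption that a successful payload carries a top-level `content` key (while a blocked one does not) is mine, so adjust it to whatever the endpoint actually returns.

```python
import json


def is_soft_blocked(status_code, body_text):
    """Return True when HTTP succeeded but the payload carries no data.

    Assumption (not confirmed by the post): a good response is a JSON
    object with a top-level "content" key, and a blocked one lacks it.
    """
    if status_code != 200:
        # A real HTTP failure, not the "200 but no data" case.
        return False
    try:
        payload = json.loads(body_text)
    except ValueError:
        # 200 with a non-JSON body: treat it as blocked as well.
        return True
    if not isinstance(payload, dict):
        return True
    return "content" not in payload


# Made-up sample payloads, for illustration only.
blocked = is_soft_blocked(200, '{"status": false, "msg": "too frequent"}')
normal = is_soft_blocked(200, '{"success": true, "content": {}}')
```

A check like this makes it easy to log exactly when the endpoint starts withholding data, instead of silently writing empty results.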

With multithreading I have not run into IP bans so far. If that happens, a few open proxy IPs should solve it.
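If proxies do become necessary, one option is to rotate through a list and hand each Chrome instance its own `--proxy-server` argument (a standard Chrome command-line switch). The sketch below only builds the argument strings; the proxy addresses are placeholders and `proxy_arguments` is a hypothetical helper, not part of the code in this post.

```python
from itertools import cycle

# Placeholder addresses; replace with proxies you are allowed to use.
PROXIES = ["http://127.0.0.1:8001", "http://127.0.0.1:8002"]


def proxy_arguments(proxies):
    """Yield Chrome '--proxy-server=...' arguments in round-robin order."""
    for proxy in cycle(proxies):
        yield "--proxy-server=" + proxy


args = proxy_arguments(PROXIES)
first = next(args)
second = next(args)
third = next(args)  # wraps around to the first proxy again

# Each value would be passed to a fresh Options object before the
# driver is created, e.g. chrome_options.add_argument(first)
```

Generators are not safe to advance from several threads at once, so it is simplest to draw the arguments in the main thread, where the drivers are created.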

The complete code is as follows:

import os
import time

import pandas as pd
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By
from threadpool import ThreadPool, makeRequests

base_url = "https://www.lagou.com/jobs/list_{keyword}/p-city_{code}?&cl=false&fromSearch=true&labelWords=&suginput="
# Beijing, Shanghai, Guangzhou, Shenzhen, Hangzhou, Wuhan, Nanjing, Chengdu, Changsha
selected_city = ['北京', '上海', '广州', '深圳', '杭州', '武汉', '南京', '成都', '长沙']
# Lagou city codes, in the same order as selected_city
selected_codes = ['2', '3', '213', '215', '6', '184', '79', '252', '198']


def main():
    chrome_options = Options()
    # chrome_options.add_argument('--no-sandbox')  # works around the "DevToolsActivePort file doesn't exist" error
    # chrome_options.add_argument('window-size=1920x3000')  # set the browser resolution
    # chrome_options.add_argument('--disable-gpu')  # Google's docs mention this flag to work around a bug
    # chrome_options.add_argument('--hide-scrollbars')  # hide scrollbars, for some special pages
    # chrome_options.add_argument('blink-settings=imagesEnabled=false')  # do not load images, for speed
    # chrome_options.add_argument('--headless')  # no visible window; on Linux without a display, startup fails without this

    # Search keywords, kept in Chinese because they go into the URL as-is
    keyword_list = [
        '深度学习'#,          # deep learning
        # '算法',            # algorithms
        # '数据挖掘',        # data mining
        # '自然语言处理',    # natural language processing
        # '计算机视觉',      # computer vision
        # '推荐算法',        # recommendation algorithms
        # 'python',
        # '语音识别',        # speech recognition
        # '数据分析'         # data analysis
    ]

    # get_detail writes one CSV per city/keyword into this directory
    os.makedirs('./data', exist_ok=True)

    pool = ThreadPool(5)
    param = []
    for i, selected_code in enumerate(selected_codes):
        # Iterate over the city URLs in turn
        for word in keyword_list:
            driver = webdriver.Chrome(options=chrome_options)
            param.append(((i, selected_code, word, driver), None))
    reqs = makeRequests(get_detail, param)
    for req in reqs:
        pool.putRequest(req)
    pool.wait()

    # Merge the per-city files into a single data.csv.
    # os.listdir already returns names with the .csv extension, and
    # concatenating first avoids writing the header row once per file.
    frames = [pd.read_csv(os.path.join('./data', n))
              for n in os.listdir('./data') if n.endswith('.csv')]
    if frames:
        pd.concat(frames, ignore_index=True).to_csv('./data.csv', index=False)


def get_detail(i, selected_code, word, driver):
    output = []
    city_detail_url = base_url.format(code=selected_code, keyword=word)
    # First page
    driver.get(city_detail_url)
    # Dismiss the pop-up if it is there. find_elements returns an empty
    # list when nothing matches, whereas find_element raises an exception.
    popup = driver.find_elements(By.XPATH, '/html/body/div[7]/div/div[2]')
    if popup:
        popup[0].click()
    # Result count shown on the tab (not used later)
    count = driver.find_element(By.XPATH, '//*[@id="tab_pos"]').text
    page = driver.find_elements(By.XPATH, '//*[@id="s_position_list"]/div[3]/div/span')[-1]
    loop = True
    while loop:
        time.sleep(2)
        city_list = driver.find_elements(By.XPATH, '//*[@id="s_position_list"]/ul/li')
        for city_detail in city_list:
            item = {}
            info_primary = city_detail.find_element(By.XPATH, './/div[1]/div[1]')
            info_company = city_detail.find_element(By.CSS_SELECTOR, '.company')
            info_pic = city_detail.find_element(By.XPATH, './/div[1]/div[3]')
            info_type = city_detail.find_element(By.CSS_SELECTOR, '.list_item_bot .li_b_l')
            info_fuli = city_detail.find_element(By.CSS_SELECTOR, '.list_item_bot .li_b_r')
            item['cityname'] = selected_city[i]
            item['keyword'] = word
            item['title'] = info_primary.find_element(By.XPATH, './/div[1]/a/h3').text
            item['area'] = info_primary.find_element(By.XPATH, './/div[1]/a/span').text
            item['publishdate'] = info_primary.find_element(By.XPATH, './/div[1]/span').text
            # One line holding salary, experience and education
            salary_line = info_primary.find_element(By.XPATH, './/div[2]').text
            item['salary'] = salary_line.split(' ')[0]
            item['experience'] = salary_line.split(' ')[1].split('/')[0]
            item['education'] = salary_line.split(' ')[1].split('/')[1]
            item['company'] = info_company.find_element(By.XPATH, './/div[1]').text
            comp = info_company.find_element(By.XPATH, './/div[2]').text.split('/')
            item['industry'] = comp[0]
            item['finance'] = comp[1]
            item['company_size'] = comp[2]
            item['logo'] = info_pic.find_element(By.XPATH, './/a/img').get_attribute('src')
            item['recruiter'] = info_type.text
            item['benefits'] = info_fuli.text
            output.append(item)
        # Check the pager's "next" element; the trailing space in the
        # class name is intentional and matches the page markup
        if page.get_attribute('class') == 'pager_next ':
            page.click()
            page = driver.find_elements(By.XPATH, '//*[@id="s_position_list"]/div[3]/div/span')[-1]
        else:
            loop = False
    output = pd.DataFrame(output)
    output.to_csv('./data/data{name}.csv'.format(name='_' + selected_city[i] + '_' + word), index=False)
    driver.quit()


if __name__ == "__main__":
    main()
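The listing takes salary, experience and education out of one text line by index, which raises `IndexError` as soon as a posting omits a field or spaces the `/` differently. A more forgiving split could look like the sketch below. The helper name `split_salary_line` is hypothetical, and the sample strings are my assumption about the line's shape, not captured output.

```python
def split_salary_line(text):
    """Split 'salary experience / education' into three stripped fields.

    Missing parts come back as empty strings instead of raising IndexError.
    """
    parts = text.strip().split(None, 1)    # salary, then the rest of the line
    salary = parts[0] if parts else ""
    rest = parts[1] if len(parts) > 1 else ""
    experience, _, education = rest.partition("/")
    return salary, experience.strip(), education.strip()


# Assumed sample line; the real page text may differ.
fields = split_salary_line("15k-30k 经验3-5年 / 本科")
```

Inside the scraping loop, the three `item[...]` assignments for salary, experience and education could then be filled from one call to this helper.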

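This article is shared for technical learning only. Please do not use the data commercially. If it affects Lagou in any way, contact me and I will take it down immediately.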